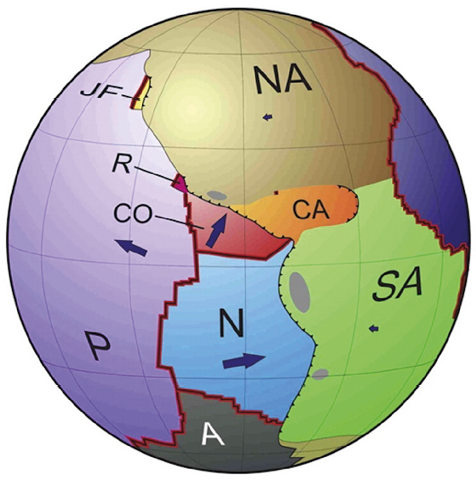

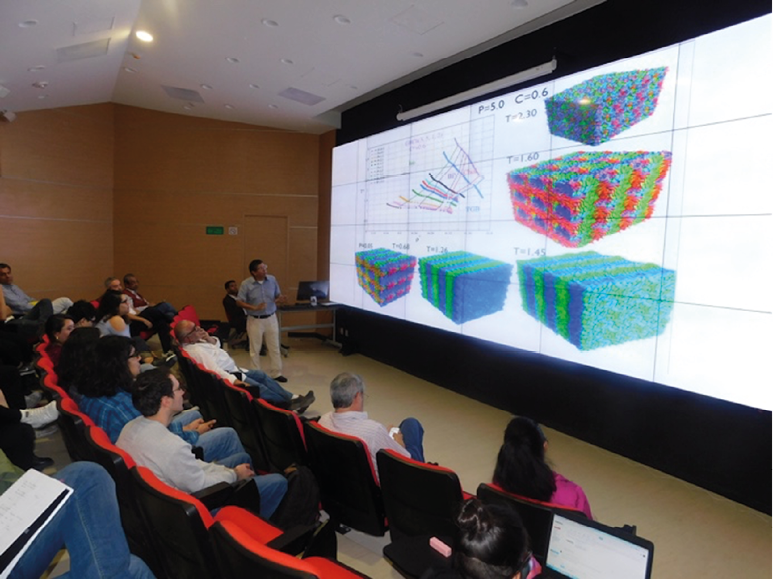

Figure 19. The Earth simulator spherical projection system at the National Laboratory for Advanced Scientific Visualization (LAVIS) (www.lavis.unam.mx).

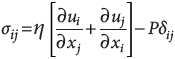

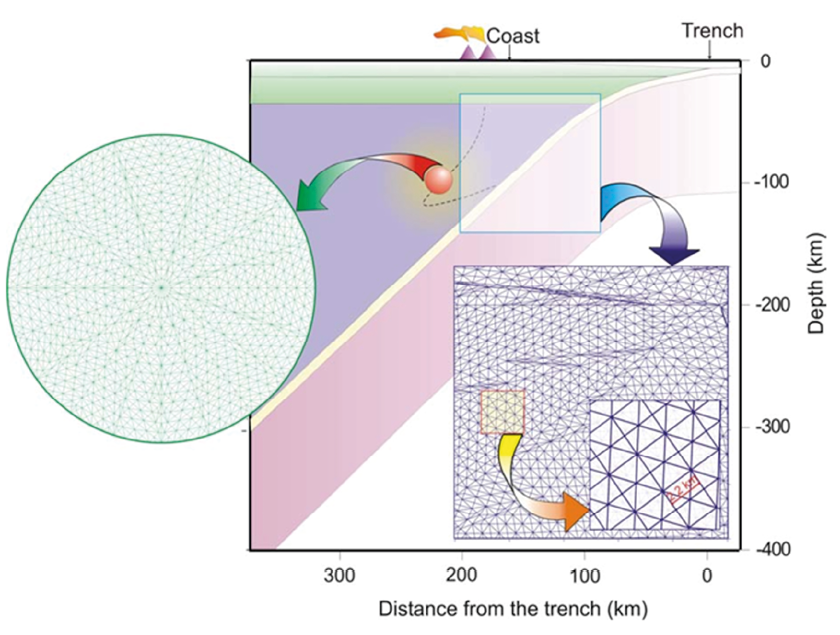

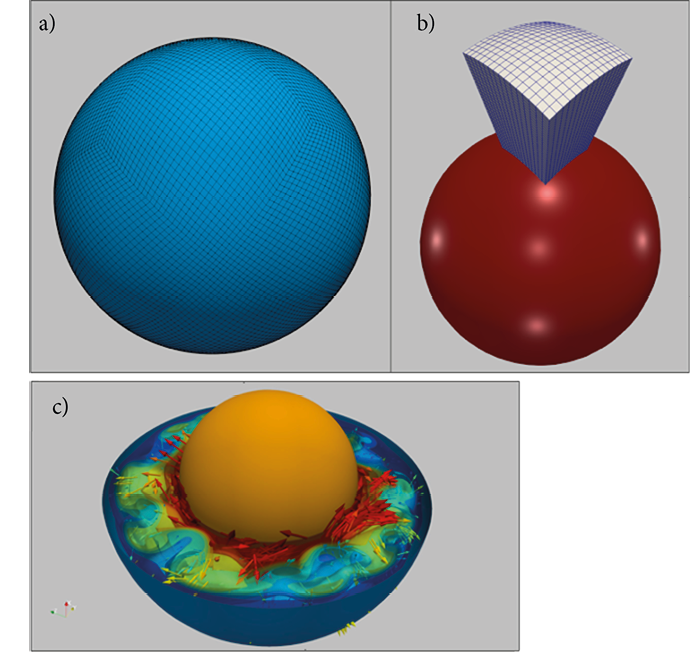

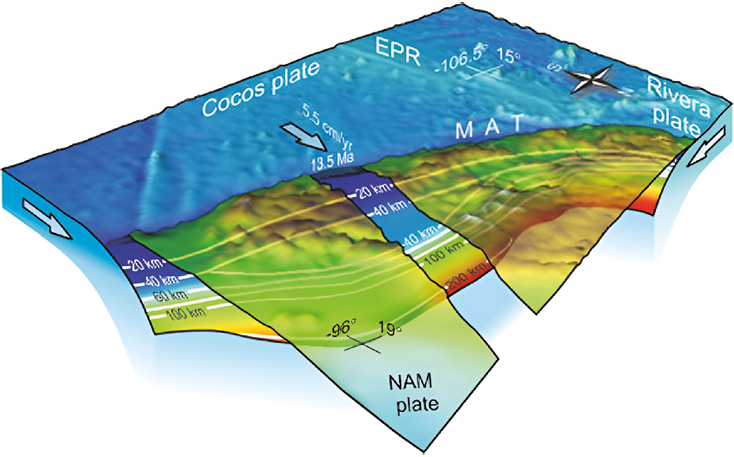

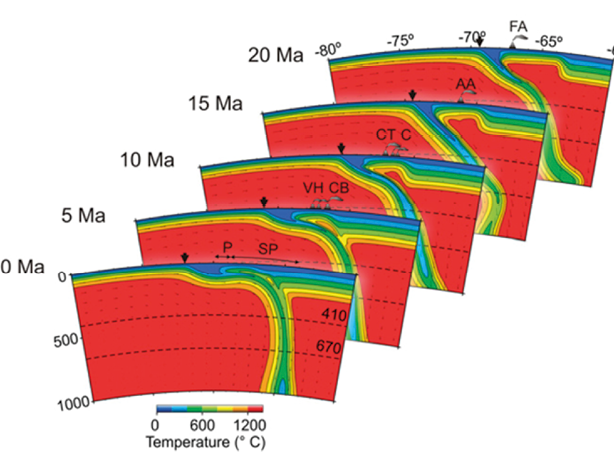

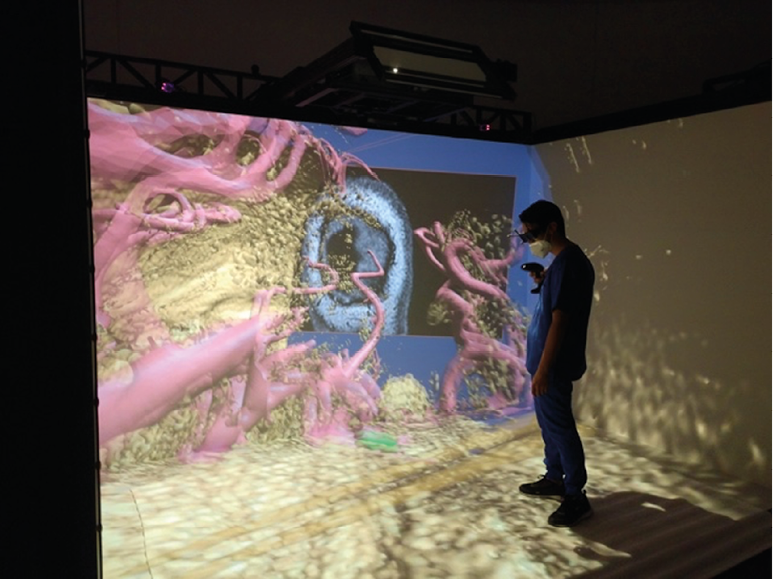

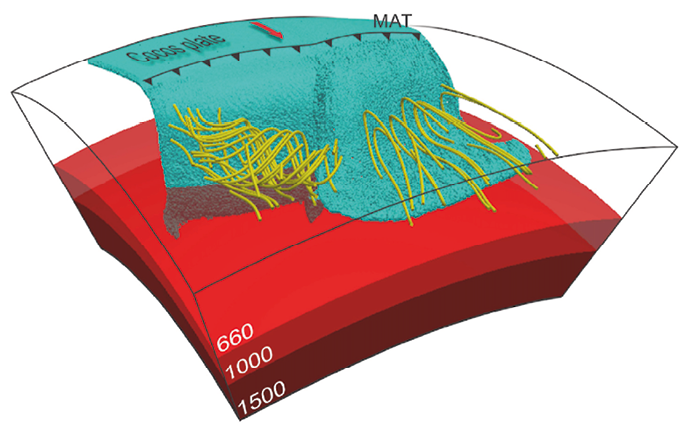

Virtual reality, massive data, and interactive research are growing rapidly and generating great expectations. At UNAM, Campus Juriquilla, future work will integrate the entire visualization and supercomputing infrastructure into an environment that allows researchers to work collaboratively, even when they are geographically dispersed across different continents. Users will be able to process, share, discuss, and interact with vast amounts of simulation data in real time, and LAVIS-UNAM has all the components needed to achieve this in the foreseeable future. This computing infrastructure has already been used to produce high-resolution 3D numerical experiments specifically designed for the Mexican subduction zone. Moreno and Manea (2021) investigated the influence of a large continental block, the Chortis Block, on the evolution of subduction in Mexico and Central America. The results show realistic subduction geometries that are consistent with seismic tomography images for the region beneath the North America Plate, where the Cocos slab flattens twice: at shallow depths and in the mantle transition zone. Moreover, the modeling results predict an upper mantle flow pattern consistent with seismic anisotropy observations (Figure 20). Beneath the Mexican subduction zone, mantle shear wave anisotropy shows fast polarization directions oriented roughly perpendicular to the Middle America Trench (MAT), whereas beneath Central America the fast polarization directions are oriented mainly parallel to the MAT. Both patterns are consistent with the modeling predictions (Figure 20).
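The comparison between fast polarization directions and the trench orientation can be illustrated with a minimal sketch. It is not the procedure used by Moreno and Manea (2021); the function names, the 30° tolerance, and the example azimuths are hypothetical and chosen only for illustration. Because fast directions and trench strike are undirected axes, the angle between them is taken modulo 180° and folded into the range 0° to 90°.

```python
def axial_angle(az1_deg, az2_deg):
    """Smallest angle (0 to 90 degrees) between two undirected axes given as azimuths."""
    diff = abs(az1_deg - az2_deg) % 180.0
    return min(diff, 180.0 - diff)


def classify_fast_direction(fast_az_deg, trench_strike_deg, tol_deg=30.0):
    """Label a fast polarization azimuth relative to the trench strike.

    tol_deg is an assumed (hypothetical) tolerance, not a value from the paper.
    """
    angle = axial_angle(fast_az_deg, trench_strike_deg)
    if angle <= tol_deg:
        return "trench-parallel"
    if angle >= 90.0 - tol_deg:
        return "trench-normal"
    return "oblique"


if __name__ == "__main__":
    # Hypothetical azimuths in degrees clockwise from north.
    trench_strike = 115.0
    for fast_az in (25.0, 110.0, 70.0):
        print(fast_az, classify_fast_direction(fast_az, trench_strike))
```

With real data, the trench strike would be evaluated locally for each station, since the orientation of the MAT changes along the margin.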

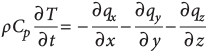

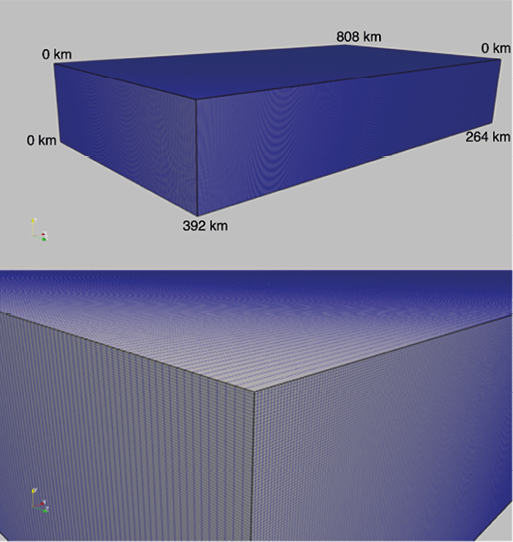

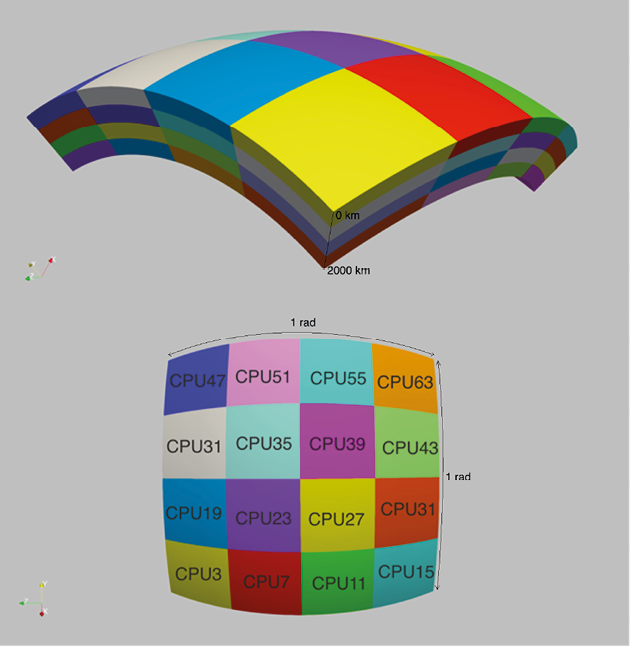

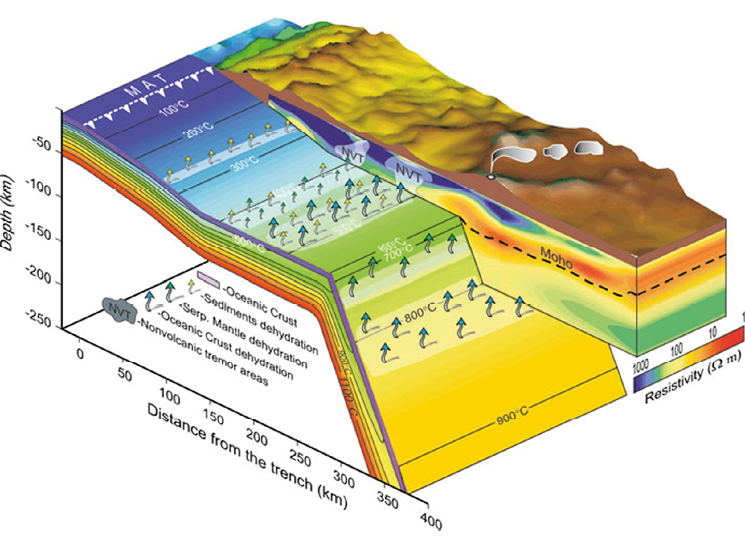

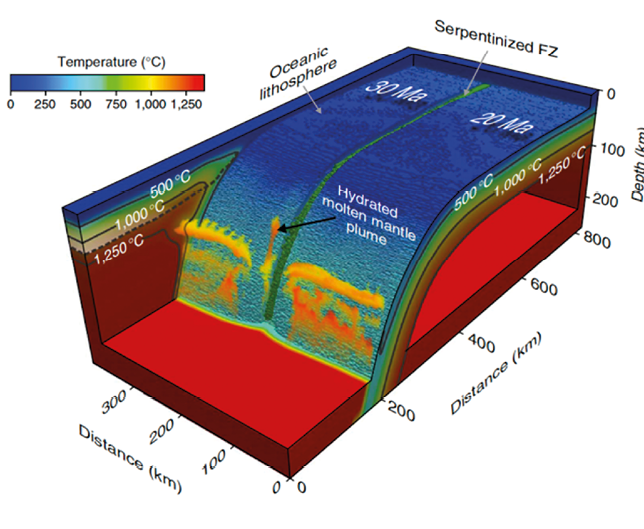

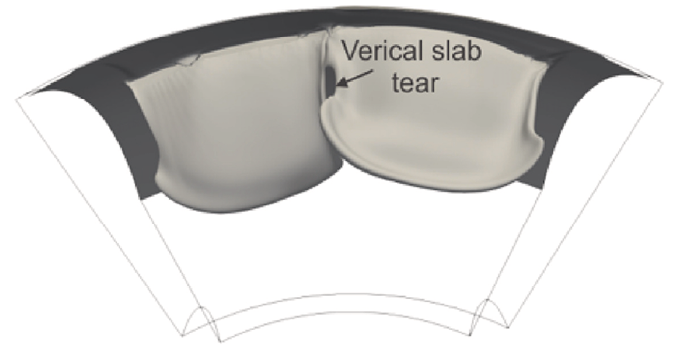

In line with the previous study, Nava-Lara and Manea (2022) took advantage of the LAVIS computing and visualization infrastructure and used 3D time-dependent regional kinematic geodynamic models to investigate the origin of a possible vertical slab tear recently inferred beneath southern Mexico from seismological observations. The modeling results predict that vertical slab tearing is achieved only when a low-viscosity linear discontinuity is introduced in the Cocos plate (Figure 21). This result is consistent with previous studies that proposed the existence of a mechanically weak zone in the oceanic lithosphere beneath the Tehuantepec fracture zone.
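One simple way to picture a low-viscosity linear discontinuity is as a narrow band of reduced viscosity around a straight line in map view. The sketch below is only an illustration under stated assumptions: it is not the CitcomS setup of Nava-Lara and Manea (2022), and the reference viscosity, weakening factor, band half-width, and line orientation are all hypothetical values.

```python
import math


def distance_to_line(x, y, x0, y0, azimuth_deg):
    """Perpendicular distance from (x, y) to a line through (x0, y0).

    The azimuth is measured clockwise from the +y (north) axis.
    """
    az = math.radians(azimuth_deg)
    dx, dy = math.sin(az), math.cos(az)  # unit vector along the line
    return abs((x - x0) * dy - (y - y0) * dx)


def plate_viscosity(x, y, eta_plate=1e23, weak_factor=1e-2,
                    x0=0.0, y0=0.0, azimuth_deg=0.0, half_width=25.0):
    """Viscosity (Pa s) of a plate containing a linear weak zone.

    All default values are hypothetical: the plate viscosity is reduced by
    weak_factor within half_width (km) of the line and left unchanged elsewhere.
    """
    d = distance_to_line(x, y, x0, y0, azimuth_deg)
    return eta_plate * weak_factor if d <= half_width else eta_plate


if __name__ == "__main__":
    # Sample the viscosity along a profile crossing the weak zone (x in km).
    for x in (-100.0, -20.0, 0.0, 20.0, 100.0):
        print(x, plate_viscosity(x, 0.0))
```

A sharp step in viscosity is the simplest choice; a smooth transition across the band would be an equally valid parameterization.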

In this paper we briefly review the computational infrastructure developed at the Centro de Geociencias UNAM, Campus Juriquilla, over the last 15 years. We describe the history of the design and construction of Horus-HPCC, a parallel computing machine designed to perform large parallel simulations in different research fields of the geosciences. We also show some of the main research results obtained using Horus-HPCC. Finally, we present the current state of high-performance computing and visualization at UNAM, Campus Juriquilla, and outline future development trends needed to maintain research at a high standard and solve frontier scientific problems.

Figure 20. 3D regional time-dependent numerical simulation performed with the parallelized finite element code CitcomS (Tan et al., 2006), showing the evolution of the slab (magenta-colored particles) beneath southern Mexico and Central America (Moreno and Manea, 2021). Yellow tubes represent mantle flow streamlines. MAT: Middle America Trench. Visualization was performed with the open-source software ParaView (www.paraview.org).

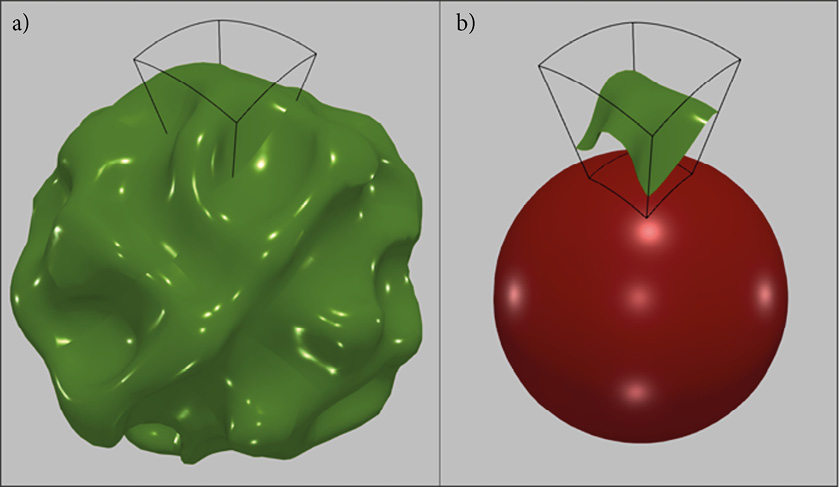

Figure 21. 3D regional time-dependent numerical simulation performed with the parallelized finite element code CitcomS (Tan et al., 2006), showing the formation of a vertical slab tear (grey-colored temperature isosurface, Tadim. = 0.5) beneath southern Mexico and Central America (Nava-Lara and Manea, 2022). Visualization was performed with the open-source software ParaView (www.paraview.org).

ACKNOWLEDGMENTS

Numerical simulations presented in this review paper were generated using the Horus-HPCC at the Centro de Geociencias UNAM, with additional computational resources from the computing facility of the National Laboratory for Advanced Scientific Visualization (LAVIS) at Universidad Nacional Autónoma de México (UNAM) (www.lavis.unam.mx). This work received support from LAVIS software engineers Luis Alberto Aguilar Bautista, Alejandro de León Cuevas, and Alejandro Avalos. This work was partly supported by the FY2021 Invitational Fellowships for Research in Japan (Long-term) and the UNAM-DGAPA-PAPIIT project grant IN114524. Figures were created using the open-source software ParaView (version 5.4.1, https://www.paraview.org/download/).

REFERENCES

Bandy, W., Taran, Y., Mortera, C., Kostoglodov, V., 2011, Geodynamics of the Mexican Pacific Margin, doi:10.1007/978-3-0348-0197-3.

Blue Brain Project, 2016, Tide: Tiled Interactive Display Environment: available at https://github.com/BlueBrain/Tide, query date 01/06/2023.

Boioli, F., Carrez, P., Cordier, P., 2017, Pure climb creep mechanism drives flow in Earth’s lower mantle: Science Advances, 3(3), doi:10.1126/sciadv.1601958.

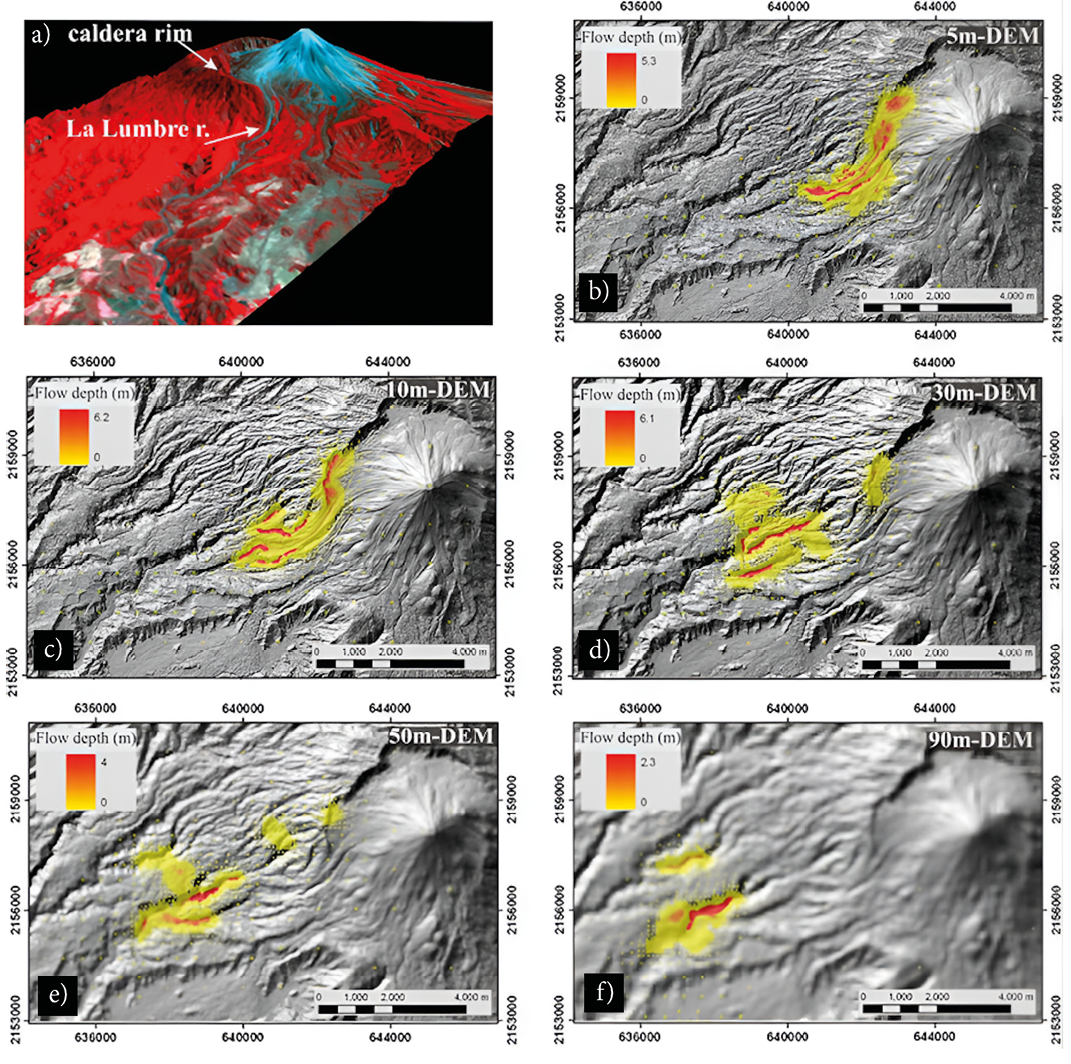

Capra, L., Manea, V.C., Manea, M., Norini, G., 2011, The importance of digital elevation model resolution on granular flow simulations: a test case for Colima volcano using TITAN2D computational routine: Natural Hazards, 59, 665-680, https://doi.org/10.1007/s11069-011-9788-6.

Carter, N.L., Horseman, S.T., Russell, J.E., Handin, J., 1993, Rheology of rocksalt: Journal of Structural Geology, 15(9-10), 1257-1271, ISSN 0191-8141.

Clarke, L., Glendinning, I., Hempel, R., 1994, The MPI Message Passing Interface Standard: The International Journal of Supercomputer Applications, 8, doi:10.1007/978-3-0348-8534-8_21.

Clayton, R.W., Davis, P.M., Perez-Campos, X., 2007, Seismic structure of the subducted Cocos plate: Eos Transactions AGU, Joint Assembly Supplement 88(23), Abstract T32A-01.

Crockett, T.W., 1997, An introduction to parallel rendering: Parallel Computing, 23, 819-843.

Correa, W.T., Klosowski, J.T., Silva, C.T., 2002, Out-of-core sort-first parallel rendering for cluster-based tiled displays, in Bartz, D., Pueyo, X., Reinhard, E. (eds.), Proceedings of the Fourth Eurographics Workshop on Parallel Graphics and Visualization: The Eurographics Association, 89-96.

Espíndola, J. M., Macías, J. L., Tilling, R.I., Sheridan, M.F., 2000, Volcanic history of El Chichón Volcano (Chiapas, Mexico) during the Holocene, and its impact on human activity: Bulletin of Volcanology, 62, 90-104, https://doi.org/10.1007/s004459900064.

Ferrari, L., 2004, Slab detachment control on mafic volcanic pulse and mantle heterogeneity in Central Mexico: Geology, 32, 77-80, doi:10.1130/G19887.1.

Ferrari, L., Orozco-Esquivel, T., Manea, V.C., Manea, M., 2012, The dynamic history of the Trans-Mexican Volcanic Belt and the Mexico subduction zone: Tectonophysics, 522-523, 122-149, ISSN 0040-1951, https://doi.org/10.1016/j.tecto.2011.09.018.

Gerya, T., 2009, Introduction to Numerical Geodynamic Modelling (1st ed): Cambridge, Cambridge University Press, 358 pp, doi:10.1017/CBO9780511809101.

Gerya, T., 2019, Introduction to Numerical Geodynamic Modelling (2nd ed.): Cambridge, Cambridge University Press, 484pp, doi:10.1017/9781316534243.

Gerya, T.V., Yuen, D.A., 2007, Robust characteristics method for modeling multiphase visco-elasto-plastic thermo-mechanical problems: Physics of the Earth and Planetary Interiors, 163, 83-105.

Gomez-Tuena, A., LaGatta, A., Langmuir, C., Goldstein, S., Ortega-Gutierrez, F., Carrasco-Nunez, G., 2003, Temporal control of subduction magmatism in the eastern Trans-Mexican volcanic belt: Mantle sources, slab contributions and crustal contamination: Geochemistry, Geophysics, Geosystems, 4(8), 8912, doi:10.1029/2003GC000524.

Gurnis, M., Hall, C., Lavier, L., 2004, Evolving force balance during incipient subduction: Geochemistry, Geophysics, Geosystems, 5, Q07001.

Hirth, G., Kohlstedt, D.L., 1996, Water in the oceanic upper mantle: implications for rheology, melt extraction and the evolution of the lithosphere: Earth and Planetary Science Letters, 144, 93-108.

Hirth, G., Kohlstedt, D.L., 2003, Rheology of the upper mantle and the mantle wedge: A view from the experimentalists: Washington DC, American Geophysical Union Geophysical Monograph Series, 138, 83-105, doi:10.1029/138GM06.

Humphreys, G., Hanrahan, P., 1999, A distributed graphics system for large tiled displays: IEEE Visualization, 215-224.

Jackson, M., Hudec, M., 2017, Salt Flow, in Salt Tectonics: Principles and Practice: Cambridge, England, Cambridge University Press, 28-60.

Jödicke, H., Jording, A., Ferrari, L., Arzate, J., Mezger, K., Rüpke, L., 2006, Fluid release from the subducted Cocos plate and partial melting of the crust deduced from magnetotelluric studies in southern Mexico: Implications for the generation of volcanism and subduction dynamics: Journal of Geophysical Research, 111, B08102.

Karato, S.-I., 2013, Physics and Chemistry of the Deep Earth: Chichester, West Sussex, UK, John Wiley & Sons, Ltd, 416 pp.

Kohlstedt, D., 2007, Properties of Rocks and Minerals – Constitutive Equations, Rheological Behavior, and Viscosity of Rocks, 389-417, doi:10.1016/B978-044452748-6/00043-2.

Li, Z., Miyafuji, S., Wu, E., Kuzuoka, H., Yamashita, N., Koike, H., 2019, OmniGlobe: An Interactive I/O System For Symmetric 360-Degree Video Communication, in Proceedings of the 2019 Designing Interactive Systems Conference (DIS '19): San Diego California, USA, ACM Digital Library, 1427-1438, https://doi.org/10.1145/3322276.3322314.

Manea, M., Manea, V.C., 2011, Flat-slab thermal structure and evolution beneath central Mexico: Pure and Applied Geophysics, 168, 1475-1487.

Manea, V.C., Manea, M., Kostoglodov, V., Sewell, G., 2005, Thermal models, magma transport, and velocity estimation beneath southern Kamchatka, in Foulger, G.R., Natland, J.H., Presnell, D.C., Anderson, D.L. (eds.), Plates, Plumes and Paradigms: GSA Special Paper, 388-31, 517-536, doi:10.1130/0-8137-2388-4.517.

Manea, V.C., Pérez-Gussinyé, M., Manea, M., 2012, Chilean flat slab subduction controlled by overriding plate thickness and trench rollback: Geology, 40(1), 35-38.

Manea, V., Leeman, W., Gerya, T., Manea, M., 2014, Subduction of fracture zones controls mantle melting and geochemical signature above slabs: Nature Communications, 5, 5095, doi:10.1038/ncomms6095.

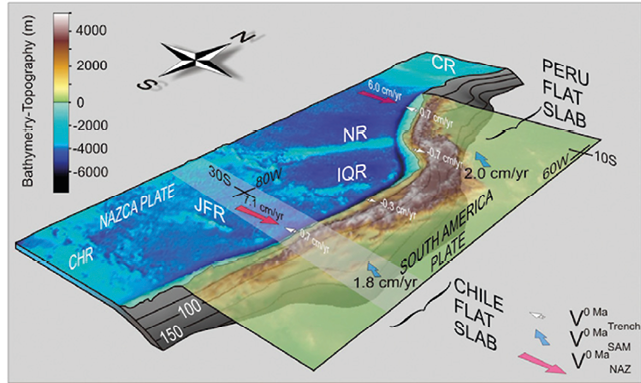

Manea, V.C., Manea, M., Ferrari, L., Orozco-Esquivel, T., Valenzuela, R., Husker, A., Kostoglodov, V., 2017, A review of the geodynamic evolution of flat slab subduction in Mexico, Peru, and Chile: Tectonophysics, 695, 27-52, doi:10.1016/j.tecto.2016.11.037.

Manjrekar, S., Sandilya, S., Bhosale, D., Kanchi, S., Pitkar, A., Gondhalekar, M., 2014, CAVE: An Emerging Immersive Technology - A Review, in UKSim-AMSS 16th International Conference on Computer Modelling and Simulation: Cambridge, UK, IEEE Xplore, 131-136.

McNamara, A.K., Zhong, S., 2004, Thermochemical structures within a spherical mantle: Superplumes or piles?: Journal of Geophysical Research: Solid Earth, 109 (B7), B07402.

Molnar, S., Cox, M., Ellsworth, D., Fuchs, H., 1994, A sorting classification of parallel rendering: IEEE Computer Graphics and Applications, 14(4), 23-32.

Moreno, J.S., Manea, M., 2021, Geodynamic evaluation of the Pacific tectonic model for Chortis block evolution using 3D numerical models of subduction: Journal of South American Earth Sciences, 112, Part 2, 103604, ISSN 0895-9811, doi:10.1016/j.jsames.2021.103604.

Moresi, L., Zhong, S., Han, L., Conrad, C., Tan, E., Gurnis, M., Choi, E., Thoutireddy, P., Manea, V.C., McNamara, A., Becker, T., Leng, W., Armendariz, L., 2014, CitcomS v3.3.1: Zenodo, available at <https://zenodo.org/records/7271920>, software, doi:10.5281/zenodo.7271920.

Nava-Lara, S.V., Manea, V.C., 2022, Numerical models for slab tearing beneath southern Mexico and northern Central America: Journal of South American Earth Sciences, 115, 103771, ISSN 0895-9811, doi:10.1016/j.jsames.2022.103771.

Oeser, J., Bunge, H.P., Mohr, M., 2006, Cluster Design in the Earth Sciences-Tethys, in Gerndt, M., Kranzlmuller, D. (eds.), HPCC: Berlin Heidelberg, Germany, Springer-Verlag, LNCS 4208, 31-40.

Pardo, M., Suárez, G., 1995, Shape of the Subducted Rivera and Cocos Plates in Southern Mexico: Seismic and Tectonic Implications: Journal of Geophysical Research, 100, 12357-12373.

Patra, A., Bauer, A., Nichita, C.C., Pitman, E.B., Sheridan, M.F., Bursik, M.I., Rupp, B., Webber, A., Stinton, A.J., Namikawa, L., Renschler, C., 2005, Parallel adaptive numerical simulation of dry avalanches over natural terrain: Journal of Volcanology and Geothermal Research, 139, 1-21.

Payero, J., Kostoglodov, V., Shapiro, N., Mikumo, T., Iglesias, A., Perez-Campos, X., Clayton, R., 2008, Non-volcanic tremor observed in the Mexican subduction zone: Geophysical Research Letters, 35, L07305.

Pérez-Campos, X., Kim, Y.H., Husker, A., Davis, P., Clayton, R., Iglesias, A., Pacheco, J., Singh, S., Manea, V.C., Gurnis, M., 2008, Horizontal subduction and truncation of the Cocos Plate beneath Central Mexico: Geophysical Research Letters, 35, doi:10.1029/2008GL035127.

Pitman, E.B., Nichita, C.C., Patra, A., Bauer, A., Sheridan, M.F., Bursik, M.I., 2003, Computing granular avalanches and landslides: Physics of Fluids, 15(12), 3638-3646.

Strak, V., Schellart, W.P., 2021, Thermo-mechanical numerical modeling of the South American subduction zone: A multi-parametric investigation: Journal of Geophysical Research: Solid Earth, 126, e2020JB021527, doi:10.1029/2020JB021527.

Syracuse, E.M., Abers, G.A., 2006, Global compilation of variations in slab depth beneath arc volcanoes and implications: Geochemistry, Geophysics, Geosystems, 7, Q05017.

Tan, E., Choi, E., Thoutireddy, P., Gurnis, M., Aivazis, M., 2006, GeoFramework: coupling multiple models of mantle convection within a computational framework: Geochemistry, Geophysics, Geosystems, 7, Q06001, 14 pp.

Turcotte, D.L., Schubert, G., 2002, Geodynamics (2nd ed): Cambridge, UK, Cambridge University Press, 456 pp.

Zhong, S., Zuber, M.T., Moresi, L., Gurnis, M., 2000, Role of temperature-dependent viscosity and surface plates in spherical shell models of mantle convection: Journal of Geophysical Research: Solid Earth, 105(B5), 11063-11082.